The human brain is very good at recognising patterns in large sets of data, but artificial intelligence, or AI, is catching up. The ability of computer algorithms to identify features in vast datasets continues to improve, and astronomy is one discipline in which artificial intelligence is really starting to take hold.

The AIs that do this are called deep neural networks, or deep nets. They loosely imitate the way a human brain solves problems, using a collection of artificial neurons that receive and process information much as biological neurons do.

Deep nets also mimic the human brain in another key regard: they can learn. Given the right algorithm, a deep net can learn the photometric features of an exoplanet passing in front of its star by processing millions of light curves in a matter of seconds.

As astronomers face an explosion in the amount of data generated by the next wave of telescopes, which will soon begin their observations, this ability will make AI a crucial tool.

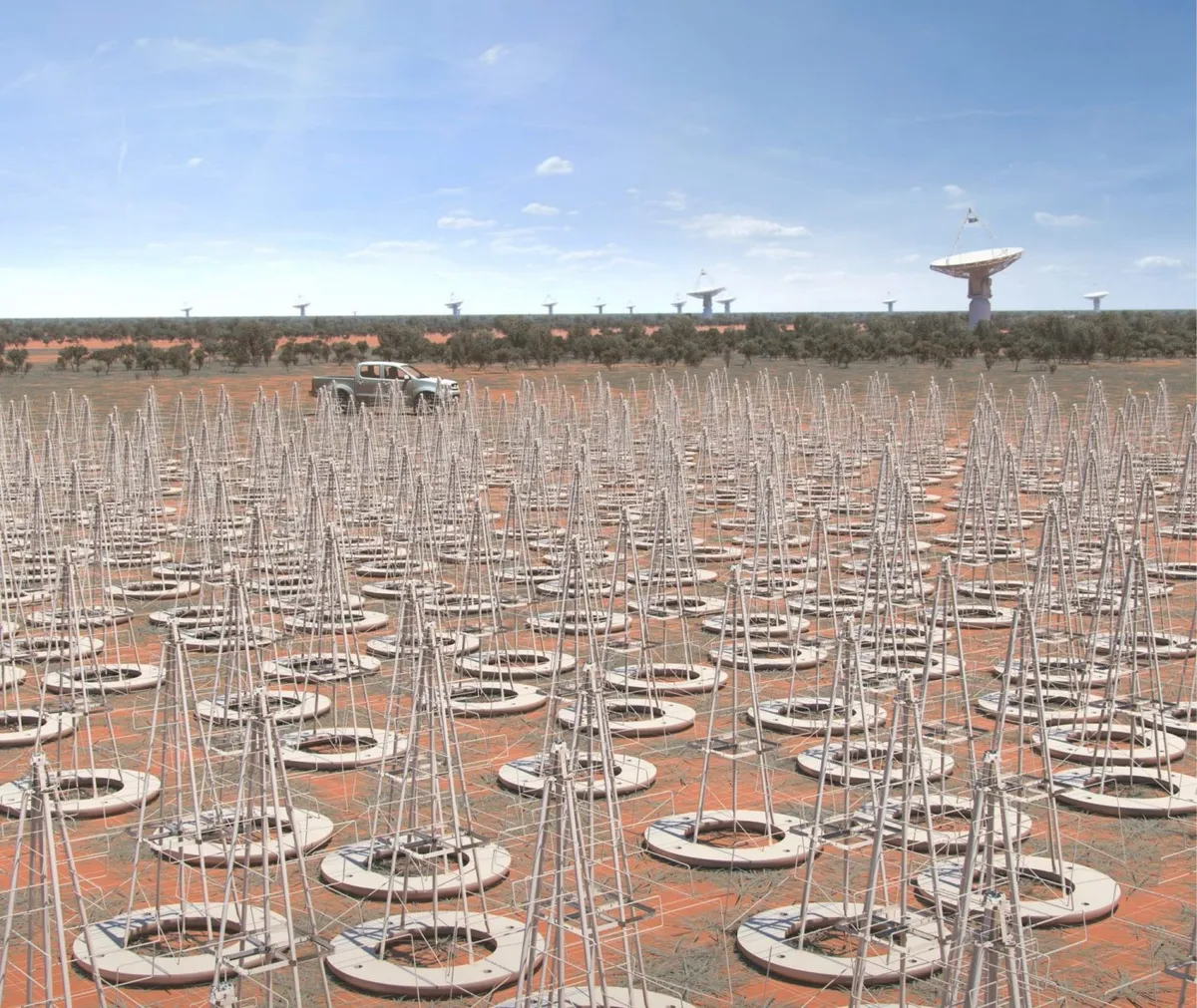

The Square Kilometre Array (SKA) is part of this next wave and promises to help usher in a new epoch for radio astronomy when it begins observations in 2020.

The SKA’s central computer will have processing power equivalent to one hundred million PCs, and if the data it will be able to collect in a single day were turned into songs, they would take almost two million years to play back on an iPod.

Seeking out exoplanets with AI

Exoplanet research is in a period known as the ‘era of big data’. As ground- and space-based telescopes become more powerful and sensitive, the data they generate is increasing at an almost unmanageable rate, resulting in the need for more efficient ways to analyse it.

And there is more data on the way.

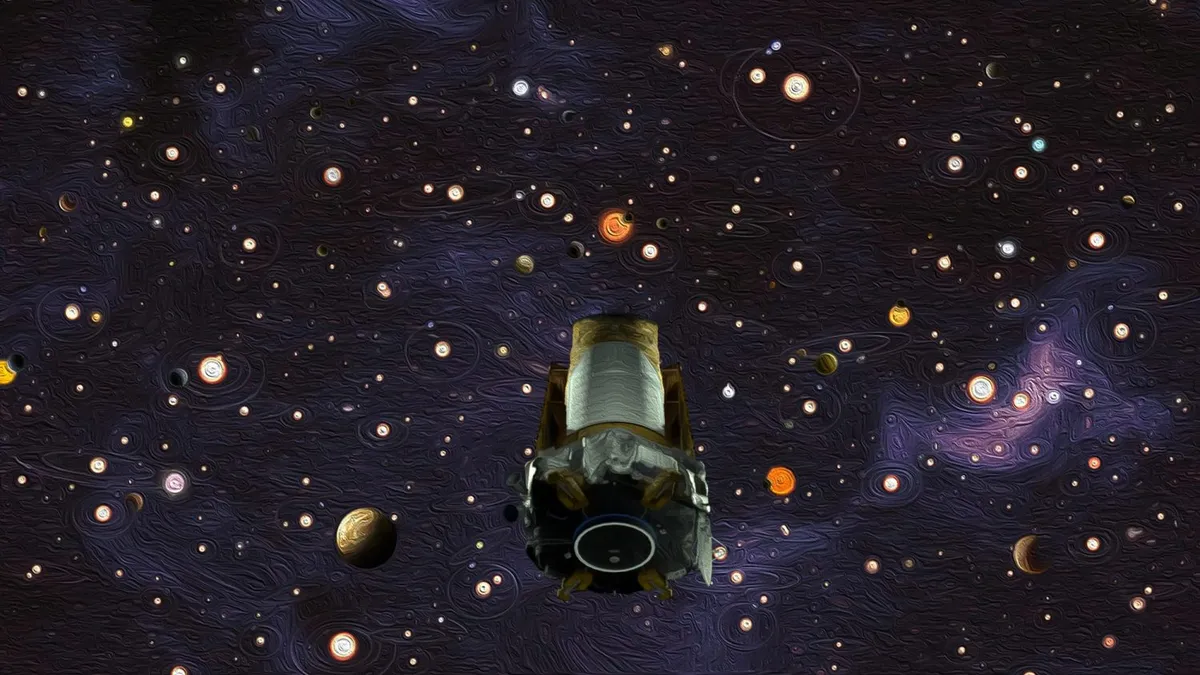

Currently one of the biggest datasets belongs to NASA’s Kepler Space Telescope, which searched for exoplanets by measuring the minute dips in the brightness of 170,000 stars as planets pass in front of them.
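
To give a feel for how small these dips are, here is a minimal Python sketch of a simulated light curve. It is an illustration only, not Kepler's actual pipeline: the box-shaped dip, the planet-to-star radius ratio, the orbital period, the noise level and the helper name `box_transit` are all assumptions chosen for the example. The one piece of physics it relies on is that a planet blocks a fraction of starlight roughly equal to the square of the ratio of its radius to the star's.

```python
import numpy as np

def box_transit(time, period, duration, depth, t0=0.0):
    """Relative flux: 1.0 out of transit, 1.0 - depth while the planet transits."""
    # Phase runs from -0.5 to 0.5 orbital periods, with 0 at mid-transit.
    phase = ((time - t0 + 0.5 * period) % period) / period - 0.5
    in_transit = np.abs(phase) * period < 0.5 * duration
    return np.where(in_transit, 1.0 - depth, 1.0)

# Hypothetical planet with a tenth of its star's radius (assumed value).
radius_ratio = 0.1
depth = radius_ratio ** 2            # fraction of starlight blocked, about 1 per cent

# Thirty days of observations, one measurement roughly every 29 minutes.
time = np.arange(0.0, 30.0, 0.02)
flux = box_transit(time, period=3.5, duration=0.12, depth=depth, t0=1.0)

# Add assumed photometric noise, then recover the depth from the noisy data.
rng = np.random.default_rng(0)
noisy_flux = flux + rng.normal(0.0, 0.001, size=time.size)
in_transit = flux < 1.0
measured_depth = 1.0 - noisy_flux[in_transit].mean()
print(f"true depth {depth:.4f}, measured depth {measured_depth:.4f}")
```

Even for this fairly large hypothetical planet the star dims by only about one per cent, which is why spotting transits by eye across many thousands of stars is so laborious.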

Kepler’s observations generated a phenomenal amount of raw information, which, at the time of writing, has led to the discovery of 2,662 confirmed exoplanets.

Kyle Pearson is an exoplanet hunter who has developed an AI to help search for worlds outside our Solar System.

During his first year studying Applied Physics at Northern Arizona University, Pearson was asked by his professor to apply what he’d learnt to his current research.

"I was already studying the characterisation of exoplanet atmospheres," he says, "so it was only natural to make the jump to exoplanet detection."

Pearson developed a deep net capable of scanning through data to detect exoplanets. It tries to learn what the potentially planet-signifying dips in star brightnesses should look like based on previous examples.
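
The idea of learning from previous examples can be sketched in a few lines of Python. This is not Pearson's network: a single-layer logistic classifier stands in for a deep net, and the light curves, dip depths, noise level and function names are all made up for illustration. The model is shown simulated curves labelled 'planet' or 'no planet', adjusts its weights to reduce its mistakes, and is then scored on curves it has never seen.

```python
import numpy as np

rng = np.random.default_rng(42)
N_POINTS = 64      # samples per phase-folded light curve (assumed)
NOISE = 0.002      # photometric noise per sample (assumed)

def simulate(n_curves):
    """Return (curves, labels); label 1 means a transit dip was injected."""
    labels = rng.integers(0, 2, size=n_curves)
    curves = 1.0 + rng.normal(0.0, NOISE, size=(n_curves, N_POINTS))
    depths = rng.uniform(0.005, 0.02, size=n_curves)
    # Inject a box-shaped dip into the 8 central samples of labelled curves.
    curves[:, 28:36] -= (labels * depths)[:, None]
    return curves, labels

def features(curves):
    # Centre on the out-of-transit brightness and scale by the noise level.
    return (curves - 1.0) / NOISE

def predict_proba(x, weights, bias):
    z = np.clip(x @ weights + bias, -30.0, 30.0)
    return 1.0 / (1.0 + np.exp(-z))

def train(x, y, steps=2000, learning_rate=0.02):
    """Fit the weights by plain gradient descent on the log loss."""
    weights, bias = np.zeros(x.shape[1]), 0.0
    for _ in range(steps):
        error = predict_proba(x, weights, bias) - y
        weights -= learning_rate * (x.T @ error) / len(y)
        bias -= learning_rate * error.mean()
    return weights, bias

train_curves, train_labels = simulate(2000)
test_curves, test_labels = simulate(500)
weights, bias = train(features(train_curves), train_labels)

predicted = predict_proba(features(test_curves), weights, bias) > 0.5
accuracy = (predicted == test_labels).mean()
print(f"accuracy on unseen light curves: {accuracy:.3f}")
```

A real deep net stacks many such layers, which is what lets it pick up dips of varying shape, timing and duration rather than the single fixed box used here.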

One of the big advantages of deep nets is that they can be trained to identify very subtle features in large sets of data.

RobERt (Robotic Exoplanet Recognition) is a deep net created by Dr Ingo Waldmann and his team at University College London.

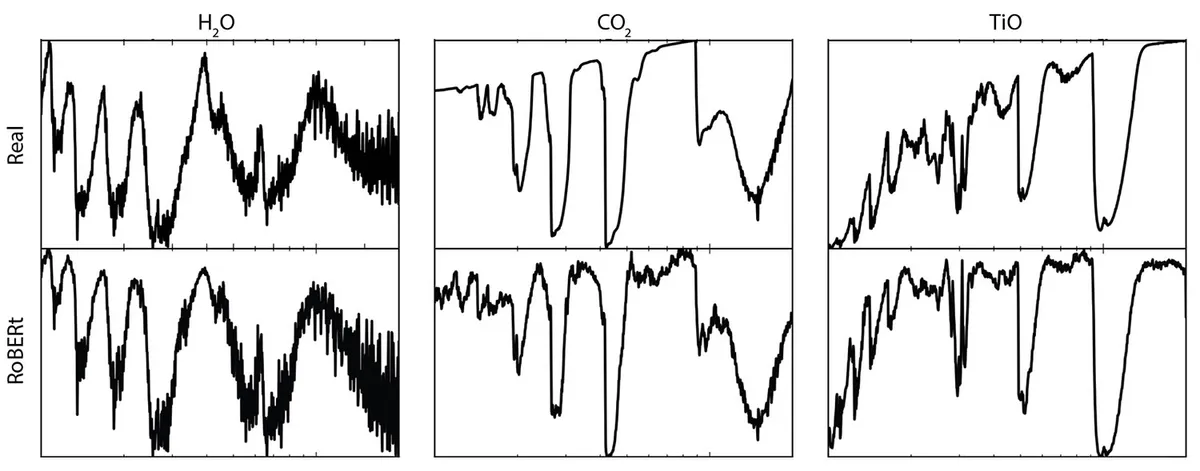

Using over 85,000 simulated light curves from five classes of exoplanets, they ‘taught’ RobERt to recognise the presence of particular molecules and gases in exoplanets’ atmospheres.

After the teaching was completed, RobERt was able to identify molecules such as water, carbon dioxide, ammonia and titanium oxide in light curves from real exoplanets with 99.7 per cent accuracy.

It would take traditional atmospheric modelling approaches days to pick out this information from the masses of data collected by exoplanet observations. RobERt can do it much faster.

But to check RobERt had really ‘learnt’ how such compounds affect the light coming from exoplanets, Waldmann’s team reversed the process and asked RobERt to predict the shape of light curves for various substances, which it did with impressive precision (see below).

RobERt is currently being used to model exoplanet data from the Hubble Space Telescope and to analyse predicted observations from the James Webb Space Telescope and the European Space Agency’s Ariel mission candidate.

Discovering new stars

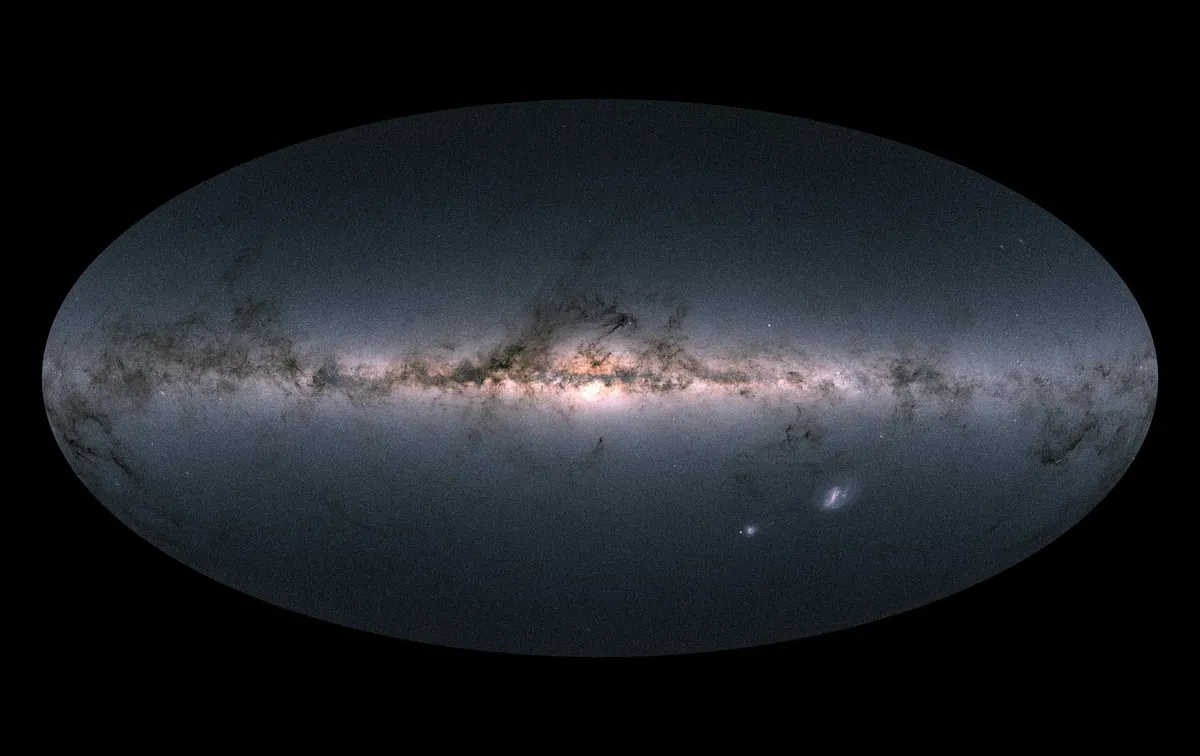

ESA’s Gaia satellite, a space observatory designed to compile the biggest 3D map of the Milky Way to date, has also benefitted from AI analysis.

In June 2017, six hypervelocity stars – a new class of star propelled by a previous interaction with a supermassive black hole – were spotted in Gaia’s observations zipping from the centre of the Milky Way to its outer regions.

The stars were found with the help of an AI network that analysed the data Gaia gathered.

The AI network was developed by Tommaso Marchetti, a PhD student at Leiden University in the Netherlands.

"We chose to use an artificial neural network for two main reasons: its ability to learn highly nonlinear functions to identify interesting objects and to generalise input data not encountered during the training," Marchetti says.

One thing is for sure – the future of astronomy is data-rich. As our telescopes become more sensitive and powerful, the information that will be beamed back for analysis will be abundant.

But whether it will be humans or computer algorithms that discover the next Earth-like exoplanet – or perhaps even our species’ next home – only time and technology will tell.

From Google to galaxy clusters

AI algorithms used by Facebook and Google have been employed by astronomers to study a phenomenon that Albert Einstein proposed in his theory of general relativity over 100 years ago.

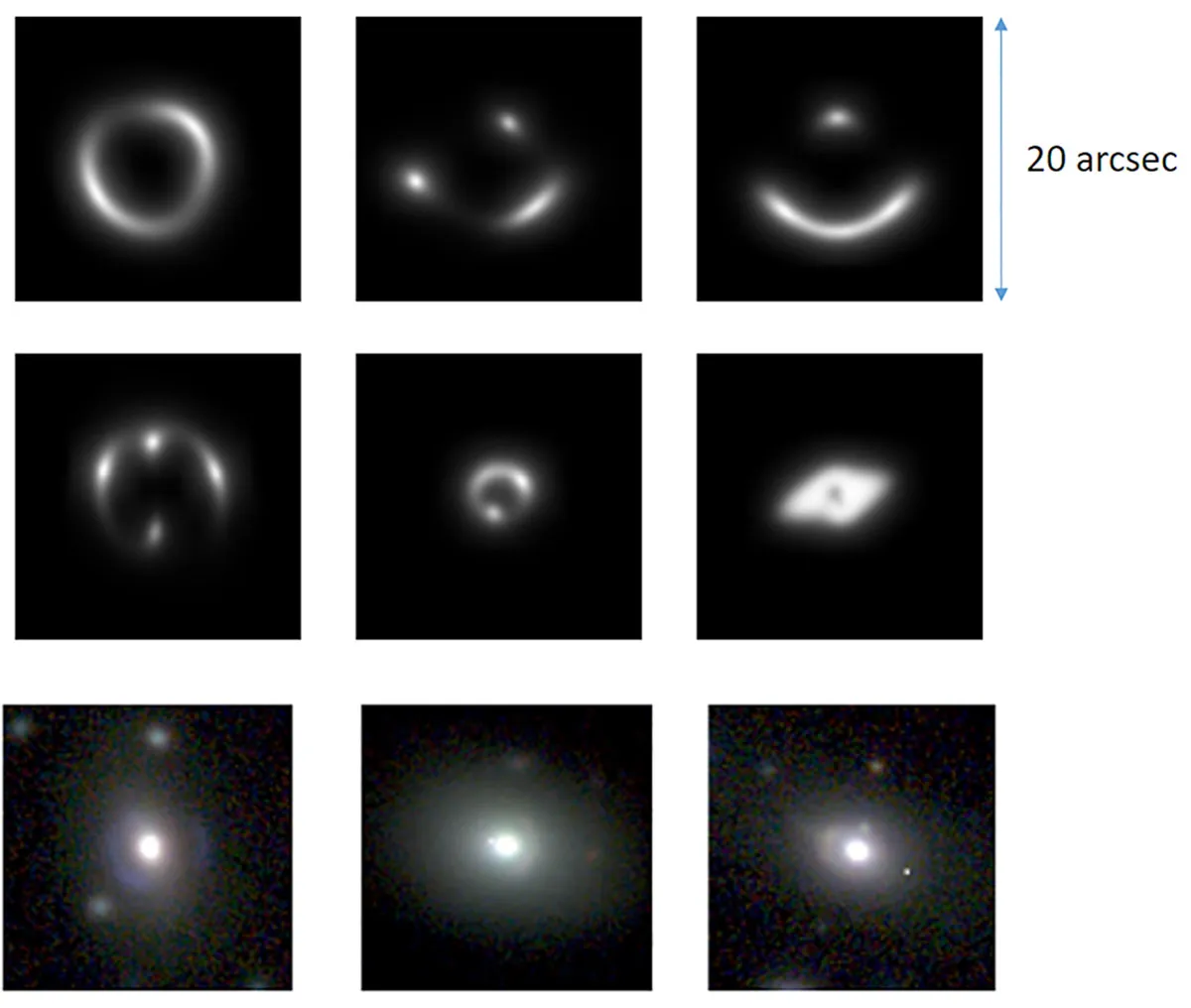

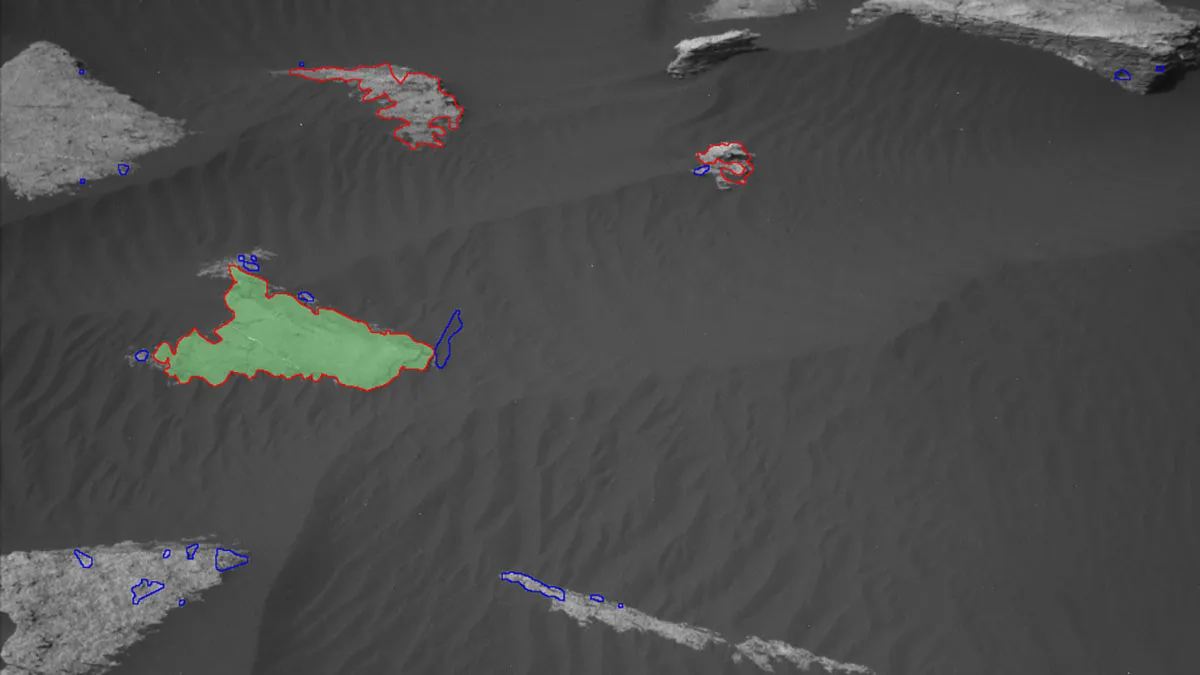

In October 2017, a team of astronomers from the universities of Groningen, Naples and Bonn developed a method of detecting gravitational lenses using the same AI as the social network and search engine giants.

Gravitational lensing is an effect caused by an enormous mass warping spacetime: massive objects such as galaxy clusters can be used as a sort of cosmic magnifying glass to observe more distant objects.

The AI algorithm the astronomers used is called a convolutional neural network and works on the same formulae employed by Google in 2017 to win a game of Go against the world’s best human player.

Facebook uses the same algorithms to compile data on the images that appear in its users’ timelines.
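
The 'convolutional' part of such a network can be sketched in Python as a small filter slid across an image, producing a strong response wherever the pattern it encodes appears. The toy image, the hand-written filter and the function name below are assumptions for illustration only. In a real network the filter values are learned from training images rather than written by hand, and many filters are stacked in layers.

```python
import numpy as np

def cross_correlate2d(image, kernel):
    """Slide `kernel` over `image` (no padding) and return the response map.

    Deep-learning libraries call this operation a convolution.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    response = np.zeros((out_h, out_w))
    for row in range(out_h):
        for col in range(out_w):
            patch = image[row:row + kh, col:col + kw]
            response[row, col] = np.sum(patch * kernel)
    return response

# Toy 8x8 "sky image" containing one short horizontal streak of light.
image = np.zeros((8, 8))
image[3, 2:5] = 1.0

# Hand-written 3x3 filter that favours bright horizontal streaks.
kernel = np.array([[-1.0, -1.0, -1.0],
                   [ 2.0,  2.0,  2.0],
                   [-1.0, -1.0, -1.0]])

# Keep only positive responses (the ReLU step used in most such networks).
response = np.maximum(cross_correlate2d(image, kernel), 0.0)
peak = np.unravel_index(np.argmax(response), response.shape)
print("response map shape:", response.shape)
print("strongest response at (row, column):", peak)
```

The strongest response lands where the filter sits squarely over the streak. A lens-finding network applies the same idea with learned filters that respond to the arcs and rings typical of gravitational lensing.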

The astronomers trained their AI using millions of images of gravitational lenses.

Normally, human astronomers would examine all the images to look for potential candidates, but the AI was able to find 761 candidate gravitational lenses independently in a patch of sky covering 255 square degrees – just over 0.5 per cent of the whole sky.

Artificial intelligence on Mars

Artificial intelligence on the Mars Curiosity rover has been helping it find targets for its ChemCam laser. The laser is used to vaporise small sections of rock or soil, so that the rover can study the gas that burns off and send the data back to NASA scientists on Earth.

But rather than NASA’s rover team having to select the targets for the ChemCam remotely, an AI system known as Autonomous Exploration for Gathering Increased Science (AEGIS) that’s on board Curiosity takes care of the target selection process.

This AI software gives Curiosity the ability to seek out interesting features for itself.

Each day, Mission Control programs a list of commands for the rover to execute based on the previous day’s images and data.

If those commands include travelling to a different location, the rover may reach its destination several hours before it is able to receive the instructions about its targets.

AEGIS lets the rover autonomously zap rocks and collect data for scientists to investigate later, freeing them to concentrate on other tasks.

Alex Green is a science writer based in Peterborough. His areas of expertise include black holes, astrobiology and space exploration.

This article originally appeared in the January 2018 issue of BBC Sky at Night Magazine.